What are they for?

Have you ever used text-to-image generators and been impressed by the results but wished they could be even better? While many of them can produce surprisingly good outputs with minimal effort, there are advanced image generation techniques you can use to create truly stunning visuals.

In this article, we’ll explore some of these methods and show you how to elevate the quality of your text-to-image generations without requiring any specialized technical knowledge.

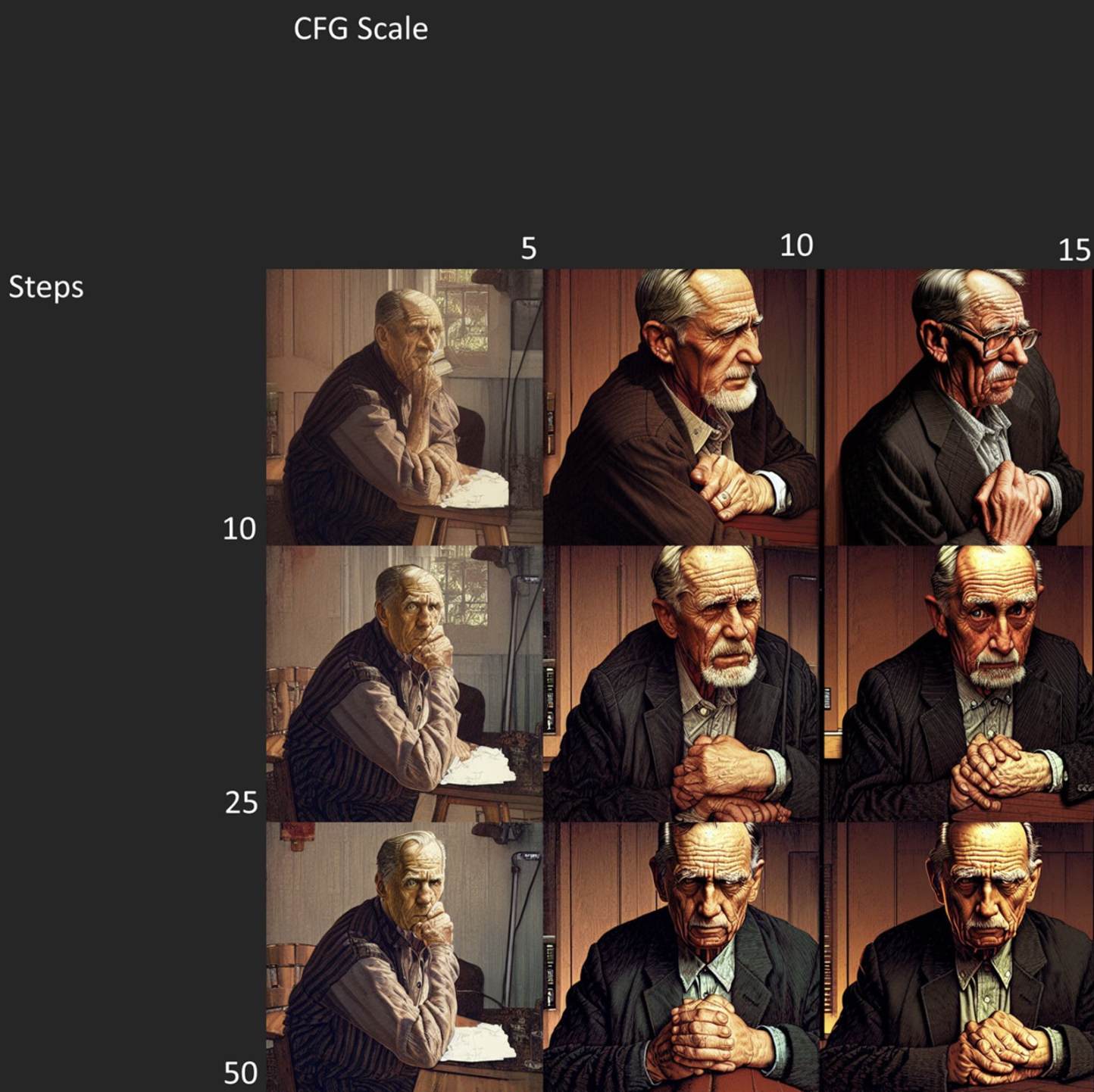

CFG Scale

The CFG Scale, short for “Classifier-Free Guidance Scale,” is a key parameter in image generation algorithms that convert text prompts into images. It determines how closely the generated image follows the given text prompt.

A CFG Scale value of 0 produces a more random image, whereas a value of 20 generates an image that closely matches the prompt. However, it’s important to note that increasing the CFG Scale beyond 15 doesn’t always give better results and may even introduce artifacts in some generated images.

For most prompts, the best results fall in a CFG Scale range of 7 to 13. With the right balance, users can produce high-quality images with the desired level of detail and coherence.
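To make the idea concrete, here is a minimal sketch of how classifier-free guidance is commonly computed in diffusion models. It is an illustration under assumptions, not the AISixteen implementation: the function name `apply_cfg` and the toy numbers are hypothetical, and real samplers work on large tensors rather than short lists.

```python
def apply_cfg(uncond, cond, scale):
    """Blend unconditional and prompt-conditioned noise predictions.

    Common classifier-free guidance rule: start from the unconditional
    prediction and move `scale` times along the direction that points
    toward the prompt-conditioned prediction.
    """
    return [u + scale * (c - u) for u, c in zip(uncond, cond)]


# Toy two-element "noise predictions" (made-up numbers, not model output).
uncond = [0.0, 1.0]  # prediction without the prompt
cond = [1.0, 3.0]    # prediction with the prompt

for scale in (0.0, 1.0, 7.0):
    print(scale, apply_cfg(uncond, cond, scale))
```

With a scale of 0 the result equals the unconditional prediction, so the prompt has no influence. Larger values push the result further in the prompt's direction, which is one plausible reason very high values can overshoot and produce artifacts.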

Steps

Adjusting the number of steps in the AI algorithm can impact the level of detail in the generated images. Fewer steps may result in less detail, but this doesn’t always lead to worse results.

When using a large number of steps, such as 40-50 or more, artifacts can appear in the final result, just like with the CFG scale. It’s tempting to use the maximum possible, but it’s not always necessary. The difference in the generated image may not be noticeable after 30-40 steps, depending on the text prompt used.

For simple images, as shown in the comparison, even 25 steps can be sufficient. Therefore, using the optimal number of steps is crucial in achieving the desired level of detail without sacrificing quality.
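One way to picture what the step count changes is the following rough sketch. It assumes a diffusion-style sampler whose model was trained on 1000 noise levels, which is a common setup but is not confirmed for AISixteen. The function `sampling_timesteps` and its default value are hypothetical.

```python
def sampling_timesteps(num_steps, train_timesteps=1000):
    """Pick evenly spaced noise levels to visit, from noisiest to cleanest.

    Assumes `train_timesteps` is divisible enough by `num_steps` for
    integer strides to be meaningful; this is an illustration only.
    """
    stride = train_timesteps // num_steps
    return [i * stride for i in range(num_steps)][::-1]


for steps in (25, 50):
    schedule = sampling_timesteps(steps)
    # Show how many levels are visited and the size of each jump.
    print(steps, "steps, jump size", schedule[0] - schedule[1])
```

Under these assumptions, doubling the steps from 25 to 50 only halves the jump between noise levels, which fits the observation that extra steps bring diminishing returns.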

Negative Prompt

Using a negative prompt is a powerful technique for removing unwanted elements or styles from an image, which can be hard to achieve with the positive prompt alone. It overrides the unconditional sampling step in the algorithm, directing the diffusion process away from the undesirable elements described in the negative prompt.
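The sketch below illustrates this mechanism under the usual classifier-free guidance formulation. It is an assumption about how such systems typically work, not AISixteen's actual code. The function `guided_prediction` and all numbers are hypothetical.

```python
def guided_prediction(cond, baseline, scale):
    """Move away from `baseline` and toward `cond` by a factor of `scale`."""
    return [b + scale * (c - b) for c, b in zip(cond, baseline)]


# Toy two-element "noise predictions" (made-up numbers, not model output).
cond = [1.0, 3.0]             # prediction for the positive prompt
empty_prompt = [0.0, 1.0]     # usual unconditional baseline
negative_prompt = [0.5, 2.0]  # prediction for the negative prompt

plain = guided_prediction(cond, empty_prompt, 7.0)
steered = guided_prediction(cond, negative_prompt, 7.0)

print("without negative prompt:", plain)
print("with negative prompt:   ", steered)
```

The only change between the two calls is the baseline. Because guidance pushes away from whatever the baseline describes, swapping the empty prompt for the negative prompt steers the result away from the unwanted content.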

Providing negative prompts allows users to have better control over the image-generation process and get more accurate results that meet their expectations. This technique can help eliminate unwanted visual artifacts or enhance specific features. Thus, it opens up new possibilities for refining the results of the AISixteen algorithm and producing more accurate images.

End Note

Experimenting with and fine-tuning the parameters of the AISixteen algorithm for generating images from text prompts can help you achieve the best results. There is no one-size-fits-all solution, and the ideal settings vary with the text prompt and the desired output image, so trying different combinations is highly recommended.

This process may involve trying different values for the CFG Scale, adjusting the number of steps, and experimenting with other parameters. It is important to closely observe the generated output with each adjustment and make incremental changes. By doing so, users can maximize the potential of the AISixteen algorithm and produce high-quality images from text prompts.
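A small grid of settings is one way to keep such experiments organized. The sketch below only builds the list of combinations to try. The key names `cfg_scale` and `steps` and the chosen values are illustrative assumptions, and each combination would still need to be entered in the studio by hand.

```python
from itertools import product


def build_sweep(cfg_scales, step_counts):
    """Return every (CFG scale, steps) combination as a settings dict."""
    return [
        {"cfg_scale": cfg, "steps": steps}
        for cfg, steps in product(cfg_scales, step_counts)
    ]


# Values taken from the ranges discussed in this article.
sweep = build_sweep(cfg_scales=[7, 10, 13], step_counts=[25, 30, 40])

for settings in sweep:
    print(settings)
```

Changing one parameter at a time within such a grid makes it easier to tell which adjustment caused a visible difference in the output.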

Visit https://aisixteen.com/studio/ and try it out yourself!